TL;DR: The Generative AI Precision Anti-Pattern

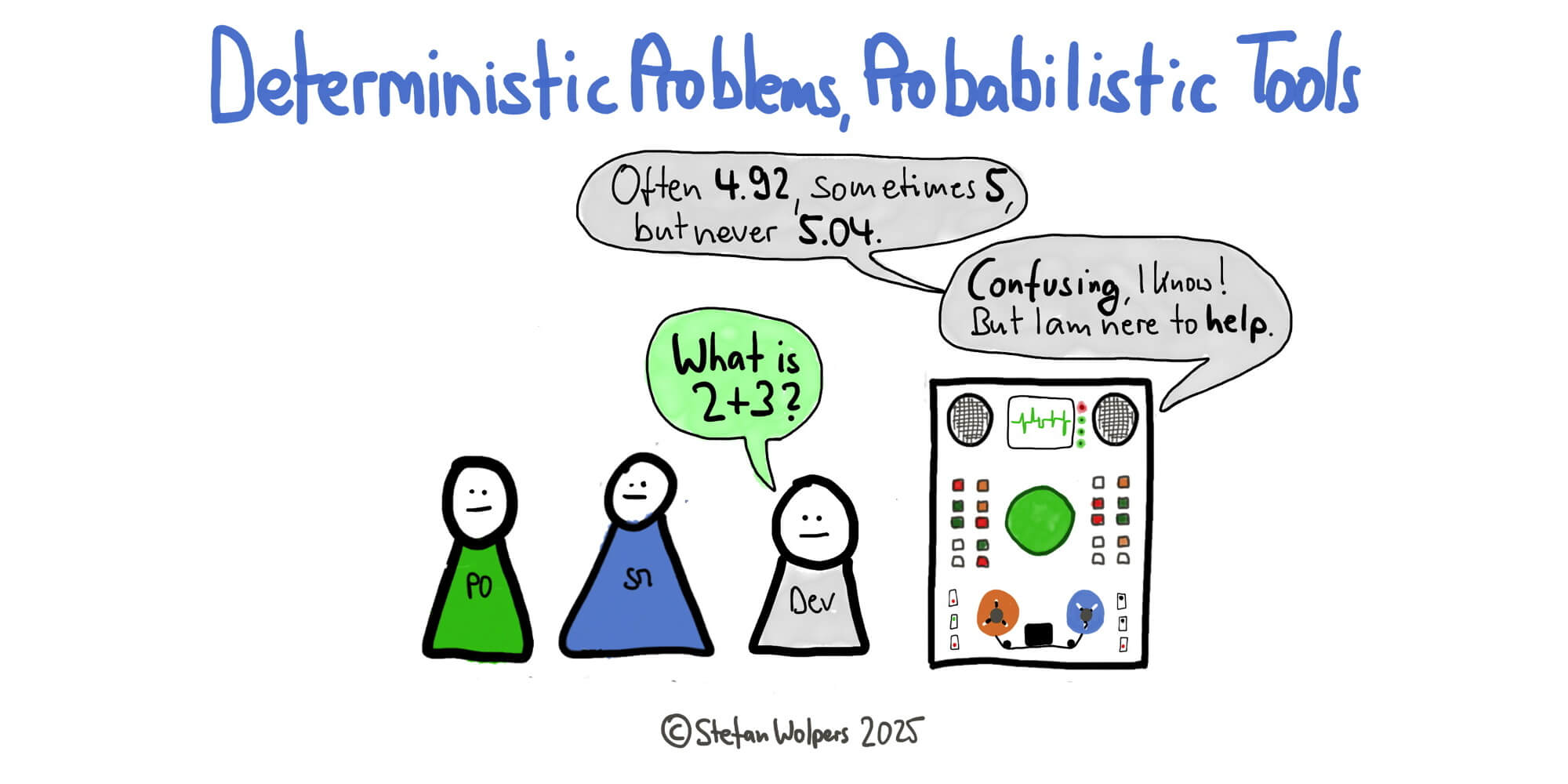

Here’s another one for your collection: The Generative AI Precision Anti-Pattern, where organizations wield LLMs like precision instruments when they’re probabilistic tools by design. Sound familiar? It’s the same pattern we see when teams cargo-cult agile practices without understanding their purpose.

LLMs excel at text summarization and pattern recognition in large datasets, which helps when you analyze user feedback or draft documentation. But can you rely on them for deterministic tasks like calculations? If you do not match your problem to the right tool, you build reliability issues into the foundation of your product.

What can you do about it? Spoiler alert: The fix isn’t better prompting, but architectural discipline and tool-job alignment.

Your LLM Isn’t Broken; You’re Using It Wrong

There’s a new and tempting anti-pattern emerging in product development: using generative AI for tasks that require 100% accuracy and consistency. While LLMs are potent tools for ideation, pattern identification, or summarization, treating them like calculators or compilers is a misuse of the technology.

This approach introduces systemic unreliability into your product and reflects a misunderstanding of what the tool is designed for. For any deterministic problem, this strategy is not just inefficient but also a direct path to failure.

The Core of the Anti-Pattern

At its heart, this anti-pattern stems from a fundamental mismatch between the problem and the tool:

- A deterministic problem has one, and only one, correct answer. Given the same input, you must get the exact same output every time. Think of compiling code, running a database query, or calculating a sales tax. It’s governed by strict, verifiable logic.

- A probabilistic system, like an LLM, operates on statistical likelihood. It predicts the most plausible sequence of words based on its training. Its goal is to generate a convincing, human-like response, not a verifiably true one.
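To make the deterministic side of this contrast concrete, here is a minimal Python sketch of a sales tax calculation. The function name `sales_tax`, the 8.25% rate, and the half-up rounding rule are illustrative assumptions, not rules taken from any particular jurisdiction.

```python
from decimal import Decimal, ROUND_HALF_UP


def sales_tax(net_amount: str, rate: str) -> Decimal:
    """Return the tax on net_amount at the given rate, rounded to cents.

    Amounts are passed as strings so Decimal can represent them exactly.
    The rounding rule (half-up) is an assumption made for this sketch.
    """
    tax = Decimal(net_amount) * Decimal(rate)
    return tax.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Run the same calculation 1,000 times and collect the distinct results.
results = {sales_tax("19.99", "0.0825") for _ in range(1000)}
print(results)  # a set with exactly one element
```

The point is not the arithmetic itself but the guarantee: the same input produces the same output on every run, and anyone can verify the result by hand.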

Using an LLM for a deterministic task is like asking an author to file your taxes. You’ll probably receive a document that is well-structured and sounds authoritative. However, the probability of it being numerically correct and compliant with tax law is low. The same thing happens when you take a tool built for creativity and fluency and give it a job that requires rigid precision.

Failure Modes You Will Encounter

When teams fall into this trap, the consequences manifest in predictable ways that undermine product quality and user trust.

Plausible but Incorrect Outputs (Hallucinations)

The most common failure is when the LLM confidently provides an incorrect answer. It might generate code that is syntactically perfect but contains a subtle, critical logic flaw. Or it might answer a user’s calculation query with a number that looks plausible but is wrong. The model isn’t lying; it’s just assembling a statistically probable sequence of tokens, without any grounding in factual accuracy.

Inconsistent Results

A core principle of any reliable system is repeatability. An LLM, by design, does not guarantee it. Because the model samples each next token from a probability distribution, asking the same question multiple times can yield different answers. This level of unreliability is unacceptable for any process that requires consistent, predictable outcomes. You cannot build a stable feature on an unstable foundation.
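A toy illustration of why sampling works against repeatability. The probability table below is invented for this sketch and does not come from any real model; it only mimics the idea that a model assigns probabilities to candidate continuations and then draws one of them.

```python
import random

# Hypothetical next-token probabilities for the prompt "17 * 23 = ".
# These numbers are made up for illustration only.
NEXT_TOKEN_PROBS = {"391": 0.90, "381": 0.06, "401": 0.04}


def sample_answer(rng: random.Random) -> str:
    """Draw one answer according to the probabilities above."""
    tokens = list(NEXT_TOKEN_PROBS)
    weights = list(NEXT_TOKEN_PROBS.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


def greedy_answer() -> str:
    """Always pick the single most probable answer."""
    return max(NEXT_TOKEN_PROBS, key=NEXT_TOKEN_PROBS.get)


rng = random.Random(7)  # seeded only so the sketch itself is reproducible
sampled = [sample_answer(rng) for _ in range(1000)]
distinct = set(sampled)
print(distinct)         # more than one distinct answer to the same question
print(greedy_answer())  # the most probable token in this toy table
```

Even when the right answer is by far the most likely one, a share of the sampled responses in this toy is wrong. Always picking the top token makes the toy repeatable, but repeatable is not the same as correct: the result is only as good as the distribution behind it.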

Unverifiable “Reasoning”

When an LLM explains its “chain-of-thought,” it’s not exposing a formal proof. It’s generating a narrative that explains its probabilistic path to the answer. This justification is itself a probabilistic guess and cannot be formally audited. Unlike a compiler error that points to a specific line of code, an LLM’s explanation offers no guarantee of logical correctness.
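Since the narrative cannot be audited, one option is to ignore it and recompute the claim independently. The sketch below is one way to do that for simple arithmetic, assuming the claim can be reduced to an expression and a number; `evaluate` and `claim_holds` are hypothetical helper names, and the evaluator deliberately supports only the four basic operators on numeric literals.

```python
import ast
import operator

# Only these binary operators are accepted; everything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(expr: str):
    """Deterministically evaluate a basic arithmetic expression."""

    def walk(node):
        # Accept plain int/float literals (bool is excluded on purpose).
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")

    return walk(ast.parse(expr, mode="eval").body)


def claim_holds(expr: str, claimed_value) -> bool:
    """Check a claimed result against an independent recomputation."""
    return evaluate(expr) == claimed_value


print(claim_holds("17 * 23", 391))  # True
print(claim_holds("17 * 23", 381))  # False
```

The check says nothing about how the model arrived at its answer. It only tells you whether the answer survives an independent, auditable computation, which is the part you can actually trust.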

Use the Right Tool for the Job

None of this means generative AI isn’t a revolutionary technology. It excels at tasks involving creativity, summarization, brainstorming, and natural language interaction, and it proves its worth for agile practitioners daily.

The solution to this fundamental capability-requirement mismatch isn’t to try to “fix” the LLM’s accuracy on deterministic tasks. It’s to recognize the categorical difference between the two problem types and architect your systems accordingly:

- For precision, accuracy, and verifiability, rely on deterministic tools: spreadsheets, compilers, or database management systems.

- View LLMs as complementary tools, not replacements. The most effective applications will combine the LLM’s pattern-recognition capabilities with traditional, logic-based systems that handle the precise computation and verification.
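The division of labor described in the two points above could be sketched as follows: the model turns free text into structured values, and ordinary code validates those values and does the math. Everything here is an assumption made for illustration: `fake_llm_extract` is a hard-coded stand-in for a real model call, and the field names, the 19% rate, and the rounding rule are hypothetical.

```python
import json
from decimal import Decimal, ROUND_HALF_UP


def fake_llm_extract(user_message: str) -> str:
    """Stand-in for a model call (hypothetical); returns JSON as text."""
    return '{"net_amount": "250.00", "tax_rate": "0.19"}'


def parse_and_validate(raw: str) -> tuple[Decimal, Decimal]:
    """Never trust the model output: parse it and check value ranges."""
    data = json.loads(raw)
    net = Decimal(data["net_amount"])
    rate = Decimal(data["tax_rate"])
    if net < 0 or not (Decimal("0") <= rate <= Decimal("1")):
        raise ValueError("extracted values out of range")
    return net, rate


def gross_total(net: Decimal, rate: Decimal) -> Decimal:
    """Deterministic part: compute the tax to the cent and add it."""
    tax = (net * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return net + tax


def answer(user_message: str) -> Decimal:
    """LLM for language understanding, plain code for the calculation."""
    net, rate = parse_and_validate(fake_llm_extract(user_message))
    return gross_total(net, rate)


print(answer("What do I pay in total for a 250 euro invoice at 19% tax?"))
```

The model can still misread the request, so the extracted values remain a guess until they are validated. What changes is that the calculation itself is no longer a guess: once the inputs are fixed, the result is repeatable and verifiable.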

Consequently, for product and engineering teams, the solution is simple: match the tool to the job. Replacing a deterministic tool with a probabilistic model isn’t an innovation; it’s an anti-pattern that introduces unnecessary risk and technical debt into your product.

Conclusion: The Generative AI Precision Anti-Pattern

The Generative AI Precision Anti-Pattern exposes a systemic issue in how we adopt emerging technologies: We rush toward the shiny object without examining our actual constraints.

Just as Scrum fails when teams ignore its empirical foundations, LLM implementations fail when we ignore their probabilistic nature. The organizations thriving with AI aren’t the ones forcing it everywhere; they’re the ones practicing disciplined product thinking. They understand that breakthrough technology requires breakthrough judgment about when not to use it.
