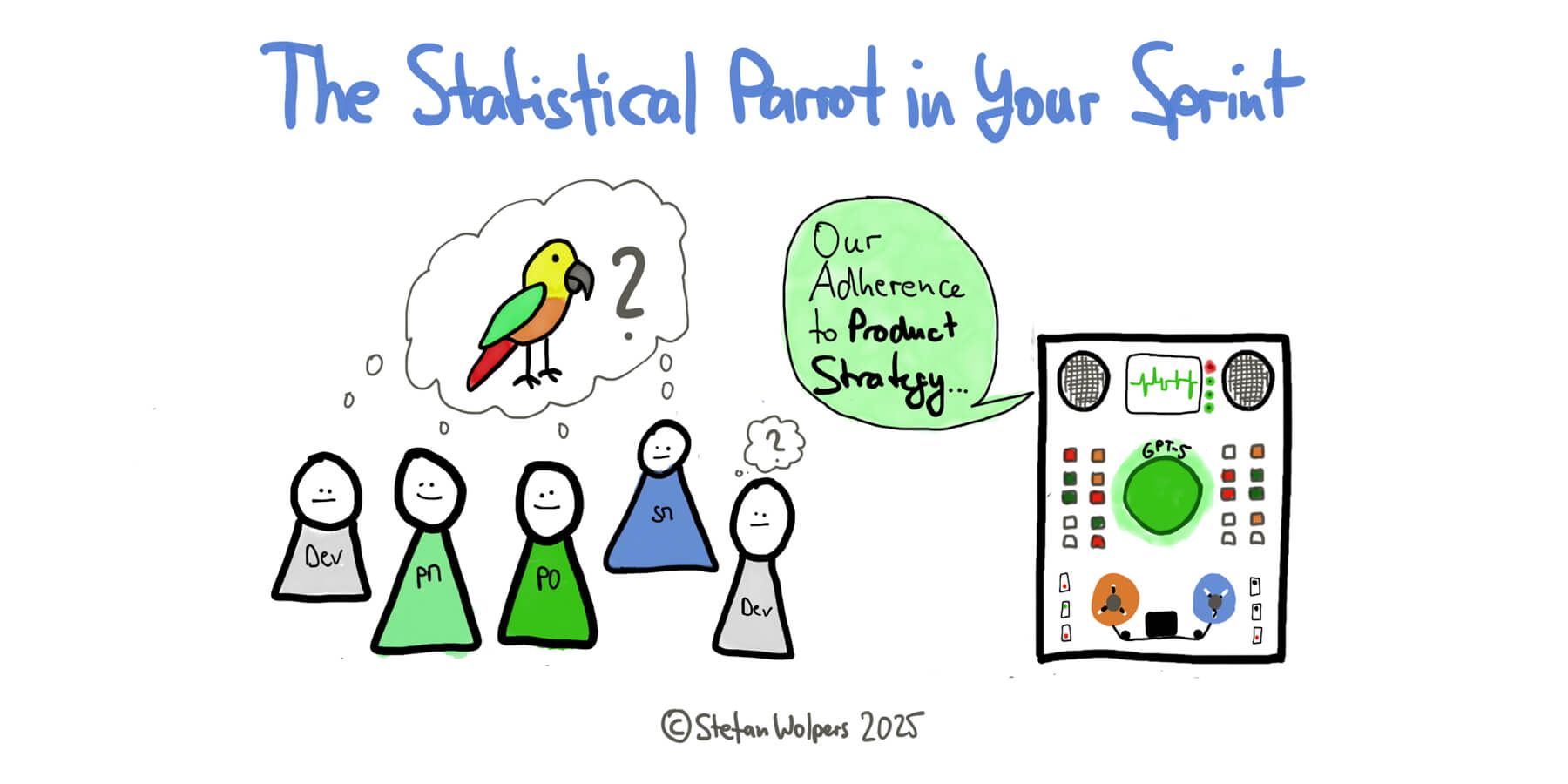

TL;DR: The AI Parrot in the Room

Your LLM tool doesn’t think. It’s a statistical AI parrot: sophisticated and trained on millions of conversations—but still a parrot. Teams that fail with AI either don’t understand this or act as if it doesn’t matter. Both mistakes are costly.

The uncomfortable truth in Agile product development isn’t that AI will replace your team (it won’t) or that it’s useless hype (it isn’t). It’s that most teams use these tools on problems that need contextual judgment, then accept the outputs without the critical thinking Agile demands.

Cutting Through the Noise

Both extremes are wrong: those predicting the end of human teams and those dismissing generative AI as a fad. They misunderstand what large language models are.

These systems recognize and regenerate patterns from data—your user feedback, Sprint metrics, market research, and their training data. When you ask a model to draft documentation, it computes likely responses; it doesn't understand your product vision. Calling this "thinking" is marketing, not cognition.
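To make "computes likely responses" concrete, here is a deliberately tiny sketch: a word-pair counter that predicts the next word purely from how often it followed the previous one. It is a toy, not how a large language model is built (real models use neural networks over far more context), and the corpus and function names are made up for illustration. The point it shares with the real thing is that nothing in it knows what a user or a report is.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words followed it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def most_likely_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical mini-corpus of user-story fragments (illustrative only).
corpus = (
    "as a user i want to export reports "
    "as a user i want to filter reports "
    "as a user i need to export data"
)

model = train_bigrams(corpus)
print(most_likely_next(model, "i"))
print(most_likely_next(model, "to"))
print(most_likely_next(model, "vision"))
```

Ask it about a word it has never seen, such as "vision", and it has nothing to say. It only replays frequencies from its input.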

The Fundamental Mismatch

Here's the stumble: applying pattern-matching tools to problems that require context and human understanding.

User stories are tokens for human discussion—the why, what, how, and who. They emerge from collaboration around specific user needs and business context. Your parrot returns pattern-based responses from available data. That mismatch creates two outcomes: you either work in a bubble where generic patterns seem fine, or you lower the bar and accept "good enough" as your definition of done.

Consider a Product Owner using AI to analyze user research. The tool can scan thousands of entries, surface recurring themes, and flag pain points—useful for discovery. It cannot grasp unspoken needs, market dynamics, or strategic priorities. It finds patterns; it doesn't decide which ones deserve a user story. That choice depends on value, feasibility, and strategy.

Where Pattern Matching Excels (And Where It Doesn't)

Many Agile activities are pattern-based and benefit from AI:

- Analyzing user feedback to surface recurring themes,

- Detecting systemic issues across bug reports,

- Summarizing research documents or interview transcripts,

- Generating initial drafts of documentation or test cases,

- Identifying velocity trends and correlations in Sprint data.

These tasks separate signal from noise—what statistical parrots do well. The risk is stretching that use into work that needs human judgment.
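As a rough illustration of the first item in that list, the sketch below counts how many feedback entries touch each theme. The theme names, keywords, and feedback entries are all hypothetical, and plain keyword matching is a stand-in for whatever your AI tool does internally. What it shows is the division of labor: the code surfaces frequencies, and someone still has to decide what they mean.

```python
from collections import Counter

# Hypothetical theme keywords; a real team would curate these for its product.
THEME_KEYWORDS = {
    "performance": ("slow", "lag", "timeout"),
    "login": ("login", "password", "sign in"),
    "pricing": ("price", "expensive", "billing"),
}


def count_themes(entries):
    """Count how many feedback entries mention each theme at least once."""
    counts = Counter()
    for entry in entries:
        text = entry.lower()
        for theme, keywords in THEME_KEYWORDS.items():
            if any(keyword in text for keyword in keywords):
                counts[theme] += 1
    return counts


# Made-up feedback entries for illustration.
feedback = [
    "The dashboard is slow and I hit a timeout twice.",
    "Login fails after I reset my password.",
    "Too expensive for a small team.",
    "Search results lag on mobile.",
]

theme_counts = count_themes(feedback)
for theme, count in theme_counts.most_common():
    print(f"{theme}: {count}")
```

The counts say that performance comes up most often in this sample. They do not say whether performance deserves the next user story; that still depends on value, feasibility, and strategy.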

Facilitation, conflict resolution, strategic prioritization, and stakeholder management require emotional intelligence, cultural awareness, and adaptive thinking. Future models may simulate parts of this. Whether simulation equals understanding is unsettled—staking your product on that debate is risky.

Turning the Statistical AI Parrot into Your Co-Pilot: The Model in Practice

Addy Osmani captures the balance:

"The future of coding is likely human+AI, not AI-alone. Embrace the helper, but keep your hands on the wheel and your engineering fundamentals sharp."

A Scrum Master who uses AI to analyze Retrospective notes can spot patterns—say, teams mentioning "technical debt" also show declining velocity. Facilitating the Retrospective still means reading the room, creating psychological safety, and guiding problem-solving.
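The kind of pattern described here can be sketched in a few lines. The example below computes a Pearson correlation between how often "technical debt" appears in each Sprint's Retrospective notes and that Sprint's velocity. The numbers are invented for illustration, and the `pearson` helper is a plain textbook implementation, not a feature of any particular tool.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient for two equally long number sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical data for six Sprints: mentions of "technical debt" in the
# Retrospective notes, and the velocity of the same Sprint.
debt_mentions = [0, 1, 2, 4, 5, 7]
velocity = [34, 33, 30, 27, 25, 21]

r = pearson(debt_mentions, velocity)
print(f"correlation: {r:.2f}")
```

A strongly negative value flags something worth raising in the next Retrospective. It does not tell you whether technical debt is causing the slowdown, or how to talk about it with the team. That part stays with the Scrum Master.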

Product Owners can use AI to process large volumes of feedback and analytics, surfacing patterns humans might miss. These insights support continuous discovery by revealing hidden correlations. Turning those patterns into user stories, tokens for human discussion, still depends on context, strategy, and value.

The Nuanced Reality

Critics note that humans rely on patterns too—experience, heuristics, mental models. True. The difference is knowing when patterns fail, adapting to context, and creating novel solutions. Whether that gap will close is unclear, but it exists today.

AI capabilities are evolving. The practical risk isn't sudden consciousness. It's a slow slide to lower standards: treating probabilistic outputs as if they were decisions, when real decisions need judgment. We already see this when teams accept AI text as finished work instead of a starting point for human refinement.

Advantage comes from clear integration: use pattern analysis to augment people while keeping humans responsible for judgment, strategy, and relationships.

Teams that succeed use the AI parrot to remove repetitive analysis, not to replace collaborative story creation. Agile empiricism still needs human inspection and adaptation: The parrot supplies analysis, and humans supply judgment and action.

As capabilities expand, the line between pattern-matching and understanding may blur. Until then, teams need practical approaches: know today's limits and stay open to change.

The question isn't whether to use AI, but how to use it without losing what makes Agile work: human collaboration, contextual judgment, and the ability to adapt when patterns fail.

Conclusion: The Choice Ahead

Teams that thrive with AI won’t be the ones that adopt it first or avoid it longest. They’ll be the ones who understand what they’re using: pattern-matching tools that analyze well but lack judgment, context, and the ability to adapt when patterns fail.

The risk is slow drift: accepting AI-generated user stories without collaborative refinement, treating pattern analysis as strategy, or letting probabilistic outputs replace human judgment. Teams that keep clear boundaries, use AI for analysis, and keep people responsible for decision-making capture the benefits without weakening their agility.

The question for your next Sprint isn’t whether AI belongs in Agile. The question is whether you can use it purposefully while preserving the critical thinking and collaboration that deliver value to users. Use AI as a helper; keep humans in charge.
