TL;DR: How Your Advantage Becomes Your Achilles’ Heel

AI can silently erode your product operating model by replacing empirical validation with pattern-matching shortcuts and algorithmic decision-making. This article on product development AI risks, along with its corresponding video, identifies three consolidated risk categories and suggests practical boundaries for maintaining customer-centric judgment while leveraging AI effectively.

📺 Watch the video now: Product Development AI Risks: When Your Leverage Becomes Your Liability.

Three Fundamental Product Development AI Risks

AI is tremendously helpful in the hands of a skilled operator. It’s a massive lever at your disposal.

However, ignoring its fundamental risks can do as much damage as the lever does good. Your obligation? Skill yourself up. Let me break down the core risk categories from a product perspective.

Three Fundamental Risks

Most discussions of AI risks in product work list multiple overlapping symptoms rather than distinct root causes. I have identified three fundamental failure patterns that matter:

1. Validation Shortcuts: When You Stop Running Experiments

This risk combines what is often called “automation bias” and “value validation shortcuts,” because both describe the same problem: Accepting AI output without empirical testing.

Remember the practice of turning product requirements into hypotheses, then test cards, experiments, and, finally, learning cards? Large language models excel at drafting these artifacts because doing so is a pattern-matching task. They generate plausible outputs fast.

The problem is obvious: You start accepting your model’s hypotheses without running proper experiments. Why not cut corners when management believes AI lets you deliver 25-50% more with the same budget?

So you skip validation. The AI analyzed thousands of support tickets and “told you” what customers want. It sounds data-driven. But AI optimizes within your existing context and data. In practice, you are optimizing your bubble without realizing that tremendous opportunities may lie outside it. AI tells you which patterns exist in your past. It cannot validate what does not yet exist in your data.

2. Vision Shortsightedness: Missing Breakthrough Opportunities

AI optimizes locally based on your context and available data. It is brilliant at incremental improvement within existing constraints but fails to identify disruptive opportunities outside your current market position.

“Product vision erosion” thus happens gradually. Each AI recommendation feels reasonable. Each optimization delivers measurable results. But you’re climbing the wrong hill: Getting better at something that may not matter in eighteen months.

This risk stems from AI’s inherently backward-looking nature, not from how you use it.

3. Human Disconnection: When Algorithms Replace Judgment

Three commonly listed risks converge here. Each describes the same situation: You have let AI mediate your connection to the humans whose problems you are solving.

- Accountability Dilution: When AI-influenced decisions fail, who is responsible? The Product Owner followed “data-driven best practices.” The data scientist provided an analysis. The executive mandated AI adoption. Nobody owns the outcome.

- Stakeholder Engagement Replaced: Instead of talking with stakeholders directly, you use AI to analyze their input. You lose the conversation, the facial expression, the pause that reveals what someone really means.

- Customer Understanding Degradation: AI personas become more real than actual customers. Decisions become technocratic rather than customer-centric.

These aren’t three problems. They’re symptoms of one disease: The algorithm now stands between you and the people you’re building for.

Why These Risks Emerge

We can trace these risks to three categories of factors:

- Human factors: Cognitive laziness. Overconfidence in detecting AI problems. Fear of replacement driving over-adoption or rejection.

- Organizational factors: Pressure for “data-driven” decisions without validation. Unclear boundaries between AI recommendations and your accountability.

- Cultural factors: Technology worship. Anti-empirical patterns preferring authority over evidence.

The Systemic Danger

When multiple pressures combine (for example, in a command-and-control culture that worships technology and faces competitive pressure), questioning AI becomes career-limiting.

The result? Product strategy shifts from business leaders to technical systems, often without anyone deciding that it should.

Your Responsibility

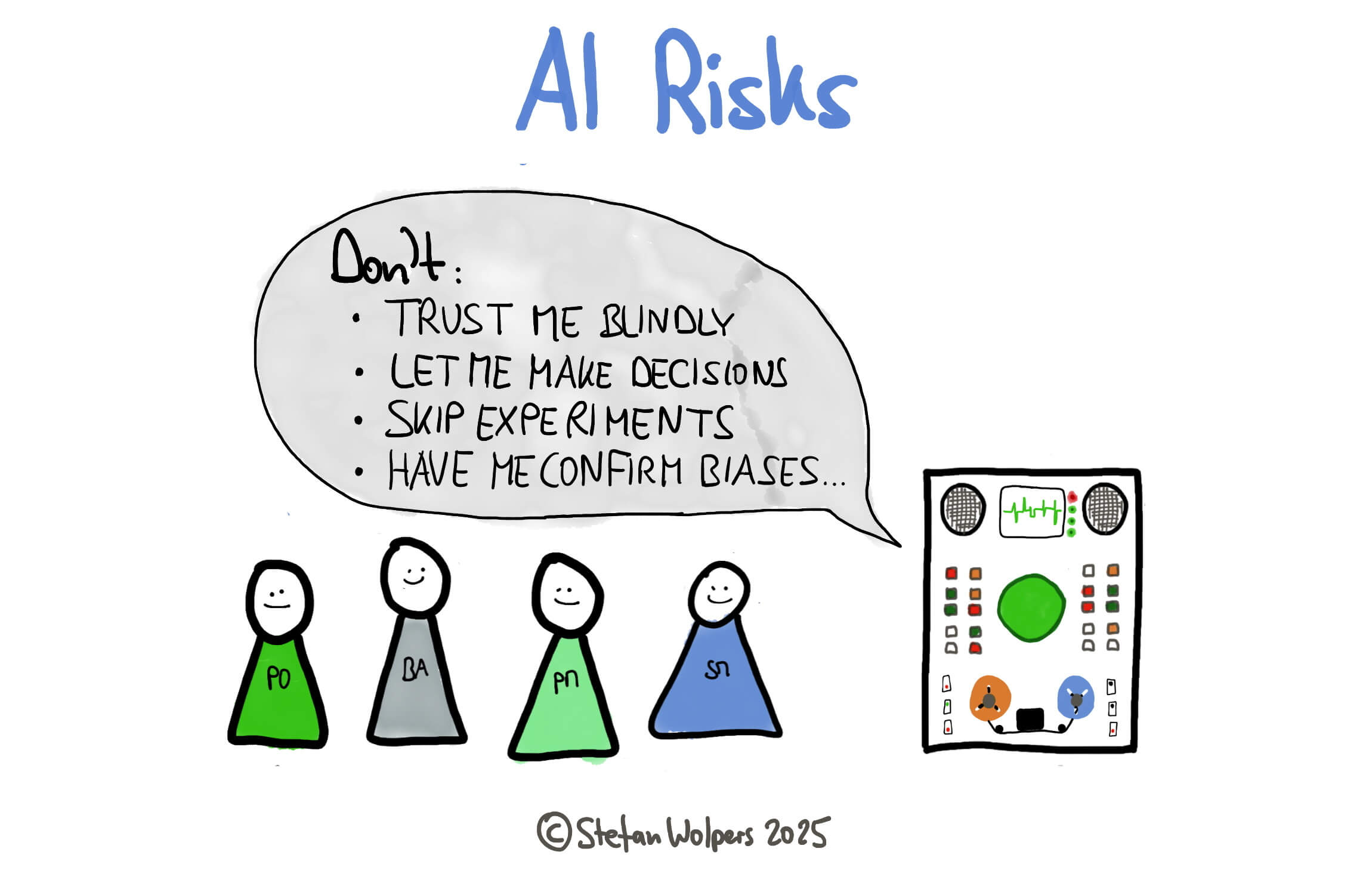

You, not the AI, remain accountable for product outcomes. Here are three practices to ensure it stays that way:

- Against validation shortcuts: Maintain empirical validation of all AI recommendations. Treat AI output as hypotheses, not conclusions.

- Against vision shortsightedness: Use AI to optimize execution, but maintain human judgment about direction. Ask: What is invisible to the AI because it is not in our data?

- Against human disconnection: Preserve direct customer contact and stakeholder engagement. Always question AI outputs, especially when they confirm your biases. (You can automate that challenge, too, by the way.)

The practitioners who thrive won’t be those who adopt AI fastest. They will be those who maintain the clearest boundaries between leveraging AI’s capabilities and outsourcing their judgment.

Watch the complete video to explore these risks in depth with additional examples and a discussion of what goes wrong when teams ignore these fundamentals.

Conclusion

The distinction between AI augmentation and judgment abdication determines whether you maintain accountability or quietly transfer product strategy to algorithms.

Your obligation remains unchanged: Validate empirically, engage directly with customers, and question outputs that confirm your existing biases.

What do you do to avoid product development AI risks?

🗞 Shall I notify you about articles like this one? Awesome! You can sign up here for the ‘Food for Agile Thought’ newsletter and join 40,000-plus subscribers.