It's January 2026. The AI hype phase is over. We've all seen the party tricks: ChatGPT writing limericks about Scrum, Claude drafting generic Retrospective agendas. Nobody's impressed anymore.

Yet in many Scrum Teams, there is a strange silence. We see the tools being used, often quietly, sometimes secretly, but we rarely discuss what this means for our roles. There is a tension between the "automate everything with agents" crowd and those who worry about becoming obsolete.

For twenty years, I have watched organizations struggle with Agile transformations. The patterns of failure are consistent: they treat Agile as a process to be installed rather than a culture to be cultivated. They value tools over individuals and interactions. Today, I see the same pattern repeating with AI. Organizations buy tokens and expect magic. Practitioners wonder if their expertise is about to be automated away.

We need a different conversation.

The Work That Made You Visible Is Now Commodity Work

Let us name some uncomfortable things.

Drafting user stories. Synthesizing stakeholder notes. Summarizing workshops. Turning a messy Retro into themes. These were never the point of your job. But they were visible proof that you were doing something. AI changes that visibility.

If you are a Scrum Master who spends 20 hours a week chasing status updates and drafting emails, you are in danger. Not because AI will take your job, but because those tasks are commodity work. When drafting and summarizing become cheap, what remains valuable is judgment, trust-building, and accountability.

Let us also name what many practitioners fear: AI replacing them. Not because they think they are unskilled, but because they have seen organizations reduce roles to checklists before. If your company once replaced "agile coaching" with a rollout plan and a set of events, why would it not replace a Scrum Master with a customized AI that generates agendas and action items?

It is a rational fear. It is also incomplete.

What the Research Shows

Harvard Business School researchers ran a field experiment with 776 professionals at Procter & Gamble. Individuals working with AI produced work comparable in quality to that of two-person teams working without it. The researchers called AI a "cybernetic teammate."

People felt better working with AI than working alone. More positive emotions, fewer negative ones. This was not just about productivity. It was about how AI changes the work experience.

The research also showed that AI helped less-experienced employees perform at levels comparable to those of experienced colleagues. It bridged knowledge gaps. It allowed people to think beyond their specialized training.

This matters for Agile practitioners:

- If you have deep knowledge of Agile, AI lets you apply that knowledge faster and more broadly.

- If you lack that knowledge, AI amplifies your incompetence. A fool with an LLM is still a fool, but now spreading nonsense more confidently. (Dunning-Kruger as a service, so to speak.)

The tool is neutral. Your expertise is not.

Reference: Dell'Acqua, F., et al. (2025). "The Cybernetic Teammate: A Field Experiment on Generative AI Reshaping Teamwork and Expertise." Harvard Business School Working Paper No. 25-043.

The Real Problem: Ad-Hoc Delegation

The struggle I see among practitioners is not access to tools. It is judgment about when to use them.

Product Owners paste sensitive customer data into public models. Scrum Masters use AI to write delicate feedback emails that sound robotic. Coaches delegate analysis they should have done themselves. Ad-hoc delegation produces ad-hoc results, and often unnecessary harm to people, careers, and organizations.

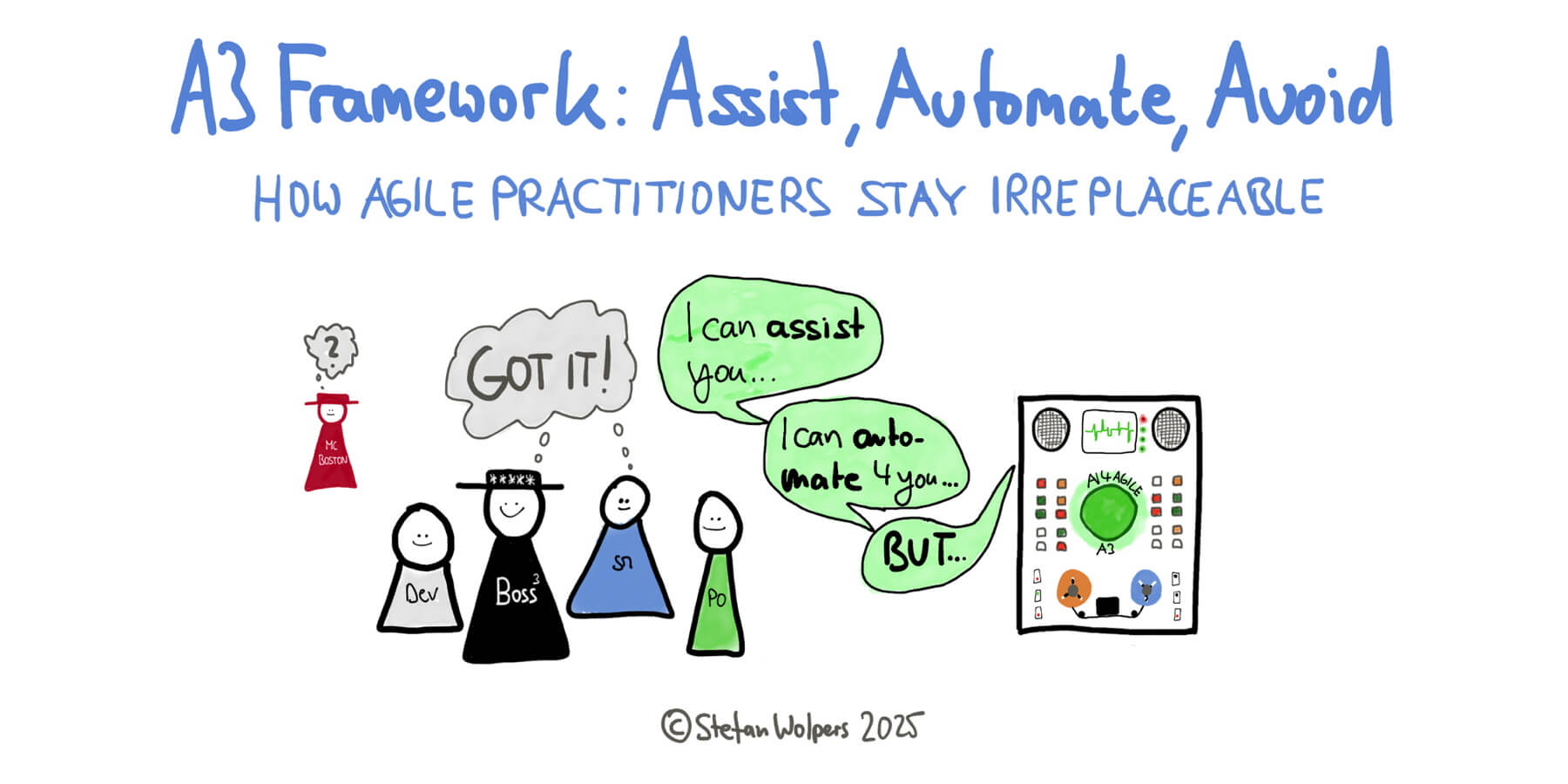

What is missing is a decision system. Before you type a prompt, categorize the task.

I find three categories useful:

Tasks where AI assists and you decide. AI generates options or drafts. You review, edit, and make the final call. AI handles the blank page; you supply the judgment. The failure mode: rubber-stamping output without genuine review.

Tasks where AI executes under constraints. AI handles the work, but you set rules, establish checkpoints, and audit results. Example: auto-summarizing a Daily Scrum with a "human review before publish" checkpoint. The failure mode: set-and-forget. Automation without monitoring becomes invisible drift.

Tasks where AI should not be involved. Too risky, too sensitive, or too context-dependent. Conflict mediation. Performance feedback. Sensitive stakeholder communication where the relationship is fragile. The failure mode: rationalization. Telling yourself AI will "just create a starting point" for delicate work.

The specific categories matter less than the habit. Categorize before you prompt. Without a system, every task you delegate to AI is a gamble.

From Secrecy to Shared Vocabulary

Categorization makes AI delegation discussable.

Right now, many teams have unspoken tension around AI use. People wonder who is using it, whether the work is "really theirs," and whether shortcuts are being taken. The questions feel accusatory: "Who used AI on this? Did you actually think about it?"

A shared decision system changes the conversation. Teams ask productive questions: "Which category is this task in? What checkpoints do we need? Who reviews the output?"

That shift, from secrecy to shared vocabulary, prevents AI use from becoming clandestine and keeps thinking visible.

AI Theater Looks Like Agile Theater

AI transformations will fail for the same reasons so many Agile transformations did: governance theater, proportionality failures, treating workers as optimization targets rather than co-designers.

You have seen this before. Impressive demos. Vanity metrics. No actual change in how decisions get made. "AI theater" is "agile theater" with a new coat of paint.

The Agile Manifesto still applies. Individuals and interactions matter more than processes and tools. AI is the ultimate tool. Our job is to use it to support individuals and improve interactions, not to let it become a process that manages us.

Conclusion: The Question Worth Sitting With

The cybernetic teammate is here. The question is how you will work with it: deliberately, with boundaries and judgment, or through ad-hoc habits that become invisible defaults.

When AI makes the artifacts cheap, will your judgment become more visible? Or will it turn out you were hiding behind the artifacts?
