For all the talk about transformation, one question still goes largely unasked (and therefore unanswered!) across government: how do we know if any of this is working?

Departments deliver more digital services, automate workflows, and publish quarterly dashboards, yet value remains largely unmeasured. Delivery activity is reported as impact, milestones as outcomes, and completion as success. Somewhere along the line, we stopped asking whether what we built made a difference. We’re too busy working on the next thing before the last thing is even complete – sound familiar?

The uncomfortable truth is that government is brilliant at tracking work for billing and procurement, but poor at proving value. The obsession with throughput (what did we deliver?) has replaced the harder, more important question: what changed for the people we serve? In product management training I frequently talk about the 3 Vs: Vision (we’ve definitely got that sorted), Value (sometimes, but we can’t prove it), and Validation (almost never).

Evidence-Based Management (EBM) offers a way to fix that.

Moving Beyond Output

Traditional measurement systems reward predictability, not progress – the questions you get asked are ‘when?’ but rarely ‘why?’. Delivery is tracked through status reports, RAG ratings, and milestones: comfort metrics that give the PMO a sense of control but track neither value nor improvement. At the end of the day, if you can determine whether a product or project is successful simply by looking at the colour of an Excel cell, something has gone very wrong. Have you ever been on a Watermelon Project? It’s green on the outside, red on the inside!

Evidence-Based Management replaces this with a simple but powerful framework built around four Key Value Areas (KVAs)[1]:

- Current Value – What measurable value are we providing to users, departments, and taxpayers right now?

- Unrealised Value – What additional value could we deliver if we met the needs of all the users we could potentially serve?

- Ability to Innovate – How capable are we of adapting to change and creating new value?

- Time to Market – How quickly can we turn learning into working solutions that users can experience?

You can consider these four KVAs as lenses on whether public money is creating meaningful outcomes (to be clear, an outcome is a change in behaviour). Imagine a team that you’ve bought for 12 months; it has delivered 35 story points every Sprint. How much value does that equate to? You don’t know – fact. Let’s stop confusing activity with achievement. The point of delivery is not to ship faster; it is to learn faster, and learning requires measurement that reflects reality, not reassurance.

From Reporting to Learning

The UK’s public sector has never been short on data; the issue is what it chooses to do with it. Too often, metrics are collected to justify funding rather than to inform future delivery. If you don’t believe me, I’d bet that the last time you were asked to justify value was connected, in some way, to a new funding round. Was I right? Business cases and lessons-learned sessions (also known as LNLs, Lessons Never Learned) become ceremonial and purposeless, and whatever knowledge they produce is filed in Confluence, where it promptly dies.

Evidence-Based Management reframes metrics as a feedback mechanism. It encourages teams to treat every assumption as a hypothesis that must be tested with evidence: not opinion or confidence, but measurable change. If you want practical techniques for doing this, look at Strategyzer’s Test and Learning Cards, or even Business Problem Statements.
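
For teams that like to see the idea written down precisely, here is a minimal Python sketch of one assumption recorded as a testable hypothesis. The `Hypothesis` class, its fields, the verdict labels and the example figures are all hypothetical illustrations, not part of the EBM Guide or any Scrum.org tooling. The point is simply that a hypothesis names a measure, a baseline and a target before the work starts, so the result is judged against evidence rather than confidence.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical record of one assumption framed as a testable hypothesis."""
    statement: str                 # "We believe that ..."
    measure: str                   # the measure we expect the change to move
    baseline: float                # value observed before the change
    target: float                  # value that would count as evidence of success
    higher_is_better: bool = True  # False for measures such as processing time

    def evaluate(self, observed: float) -> str:
        """Judge an observed measurement against the baseline and target."""
        if self.higher_is_better:
            improved = observed > self.baseline
            met = observed >= self.target
        else:
            improved = observed < self.baseline
            met = observed <= self.target
        if met:
            return "validated"
        if improved:
            return "partial: moved in the right direction, target not met"
        return "invalidated: no measurable improvement"


# Illustrative figures only; they are not drawn from any real service.
renewal = Hypothesis(
    statement="We believe pre-filling known applicant details will reduce abandoned applications",
    measure="Online application completion rate (%)",
    baseline=62.0,
    target=70.0,
)
print(renewal.evaluate(66.0))  # partial: moved in the right direction, target not met
```

With an invented baseline of 62% and target of 70%, an observed completion rate of 66% comes back as partial: the change helped, but not enough to call the hypothesis validated. That is a more honest answer than a green cell.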

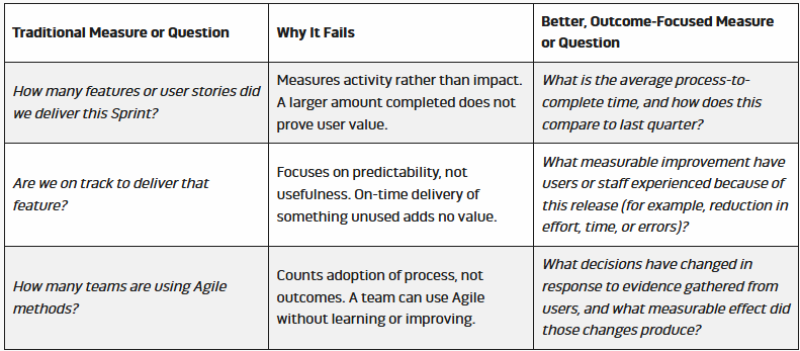

Let’s take a look at some typical measures or questions asked by department leadership and how they could be improved to place value at their centre:

Connecting Strategy to Delivery

The real test of value measurement is whether it links strategy to delivery, because at present those two worlds often run on parallel tracks. HM Treasury speaks in terms of benefits realisation[2], individual departments talk about delivery programmes, and delivery teams measure Sprint velocity, yet none of these connects directly to outcomes.

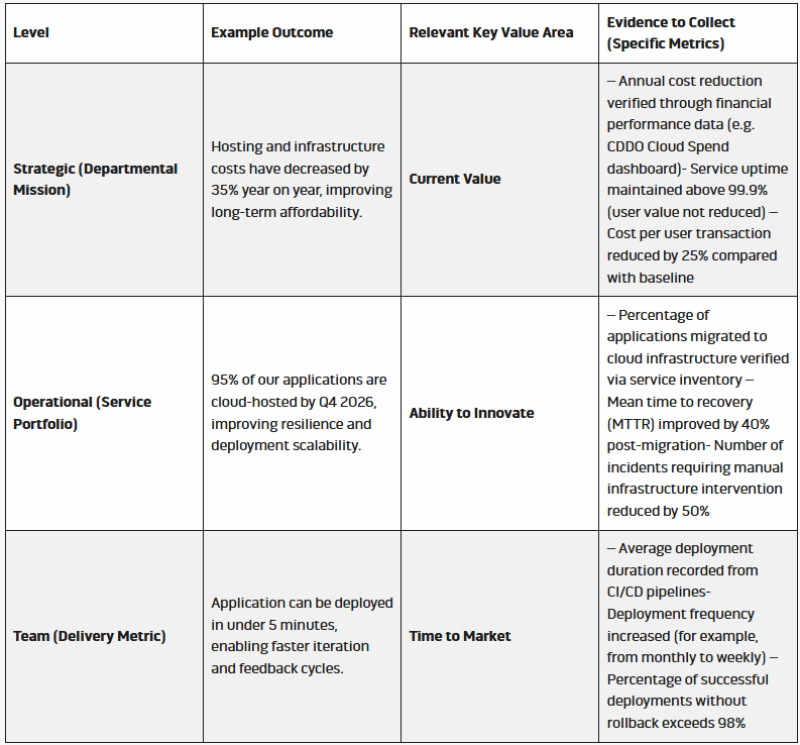

EBM closes that gap through evidence loops. It encourages a thread from mission to metric:

No excuses: every team should have Product Goals or OKRs underpinned by evidence-based Key Value Measures (KVMs) that your board understands. If we can connect each team to the overarching mission, then measuring value for portfolio planning or annual funding requests becomes much easier.
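
To picture the thread from mission to metric, here is a minimal Python sketch of it as a simple tree, loosely modelled on the EBM Guide’s layering of Strategic, Intermediate and Immediate Tactical Goals. The `Goal` class, the `threads` and `unmeasured` helpers and the licence-renewal example are hypothetical illustrations, not an official EBM data model. `threads` lists every path from the mission down to a measure, and `unmeasured` flags team-level goals that nobody can yet evidence.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """Hypothetical goal node: a mission, an intermediate goal or a team goal."""
    name: str
    kvms: list[str] = field(default_factory=list)         # Key Value Measures evidencing this goal
    children: list["Goal"] = field(default_factory=list)  # goals that contribute to this one


def threads(goal: Goal, path: tuple[str, ...] = ()) -> list[str]:
    """Return every mission-to-metric thread as a readable string."""
    path = path + (goal.name,)
    result = [" > ".join(path + (kvm,)) for kvm in goal.kvms]
    for child in goal.children:
        result.extend(threads(child, path))
    return result


def unmeasured(goal: Goal) -> list[str]:
    """Return leaf (team-level) goals that have no KVM attached."""
    if not goal.children:
        return [] if goal.kvms else [goal.name]
    missing: list[str] = []
    for child in goal.children:
        missing.extend(unmeasured(child))
    return missing


# Invented example for illustration only.
mission = Goal(
    "Citizens can renew a licence online without phoning us",
    children=[
        Goal(
            "Reduce failed online renewals",
            kvms=["Renewal completion rate"],
            children=[
                Goal("Team A: simplify identity checks", kvms=["Identity check pass rate"]),
                Goal("Team B: redesign the payment page"),
            ],
        )
    ],
)

for thread in threads(mission):
    print(thread)
print("No KVM yet:", unmeasured(mission))
```

In this invented example, Team B’s goal has no Key Value Measure, so it cannot be connected to the mission. That is exactly the conversation to have before the next funding round, not after it.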

What can you do about it?

- Embed outcome reviews, not status updates; they are not the same! Quarterly reviews should discuss impact trends, not traffic-light colours.

- Create value transparency. When software teams explain their work in technical terms, hold them accountable for explaining it in terms of value.

Conclusion

Evidence-Based Management gives government a framework for honesty and a way to start measuring change through empirical learning. The departments that master it will not just deliver faster; they will deliver smarter. They will understand what is working, what is not, and why[3]. That is what value really looks like: proven, not presumed. Don’t let software teams keep focusing on delivery alone – start focusing on value.

References

- [1] Scrum.org, Evidence-Based Management Guide (2024) – https://www.scrum.org/resources/evidence-based-management

- [2] HM Treasury, Public Value Framework (2022) – https://www.gov.uk/government/publications/public-value-framework-and-supplementary-guidance

- [3] OECD, Measuring Public Value in Government (2024) – https://www.oecd.org/gov/measuring-public-value-in-government.htm