In 2024, a "3-point" story typically represented X amount of human effort. In 2026, an AI agent can generate the code for that same story in 4 seconds.

This creates a crisis for Agile measurement. If your team starts using Copilot or Cursor, and their Velocity jumps from 50 to 5,000 in one Sprint, have they actually delivered 100x more value? No. They have simply broken the metric.

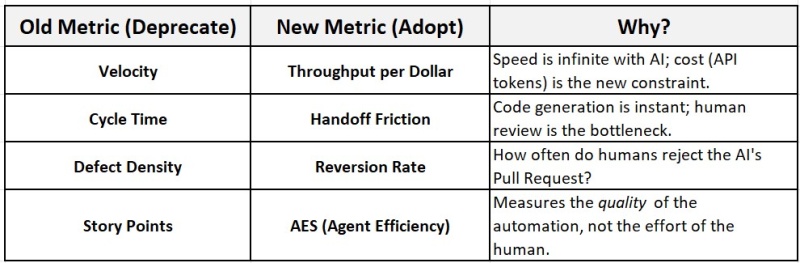

Velocity has always been a proxy for effort. But when AI reduces the drafting effort to near-zero while leaving the verification complexity high, Velocity becomes a vanity metric (arguably, it already was one).

To maintain Empiricism, we must retire Velocity in favor of metrics that measure the friction between your silicon workforce (Agents) and your carbon workforce (Humans).

Here are the two new Key Performance Indicators (KPIs) for the Agentic Era.

This article was originally published by ALDI

1. Agent Efficiency Score (AES)

In Evidence-Based Management (EBM), we look for "Ability to Innovate." If your humans are spending all their time fixing bad AI code, their ability to innovate drops.

Agent Efficiency Score (AES) measures the reliability of an AI agent. It answers the question: "Is this tool actually saving us work, or is it just creating noise?"

The Formula:

AES = 100 × Tasks Completed Autonomously / (Total Tasks Assigned + (Human Interventions × Complexity Penalty))
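As a minimal sketch, the formula can be computed like this. The function name, the parameter names, and the sample numbers are illustrative assumptions, and the result is scaled by 100 only so that it lines up with the 0–100 bands used in this article.

```python
def agent_efficiency_score(
    completed_autonomously: int,
    total_assigned: int,
    human_interventions: int,
    complexity_penalty: float = 1.0,
) -> float:
    """Return AES on a 0-100 scale (the scaling by 100 is an assumption)."""
    if total_assigned <= 0:
        raise ValueError("total_assigned must be positive")
    # Each human intervention inflates the denominator by the penalty weight.
    denominator = total_assigned + human_interventions * complexity_penalty
    return 100.0 * completed_autonomously / denominator


# Hypothetical sprint: 20 tasks assigned, 12 merged with zero human edits,
# 8 human interventions, each weighted with a complexity penalty of 0.5.
score = agent_efficiency_score(12, 20, 8, complexity_penalty=0.5)
print(score)  # 50.0
```

How the Complexity Penalty is chosen is left open here; a team would likely need to calibrate it against how costly an intervention really is in its own workflow.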

How to Inspect It:

High AES (80–100): The agent acts like a "Senior Developer." It takes a PBI, executes the task, passes unit tests, and the Pull Request (PR) is merged with zero human edits.

Low AES (<50): The agent is a "Junior Intern." It generates output quickly, but a human spends hours rewriting it.

The Adaptation: If an agent's AES drops below 40, the Scrum Team should inspect this in the Retrospective. The solution might be to fire the agent for that specific workflow and revert to human crafting.
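One way to bring these thresholds into a Retrospective is a small per-workflow check, sketched below. The workflow names and scores are made up for illustration, and the "middle" label for scores between 50 and 80 is an assumption, since the bands above do not name that range.

```python
def aes_band(score: float) -> str:
    """Map an AES value (0-100) to the bands described in the article."""
    if score >= 80:
        return "high"    # "Senior Developer" behaviour
    if score < 50:
        return "low"     # "Junior Intern" behaviour
    return "middle"      # unnamed in the article; label is an assumption


def workflows_to_inspect(scores: dict[str, float], threshold: float = 40.0) -> list[str]:
    """Return the workflows whose AES fell below the Retrospective threshold."""
    return sorted(name for name, score in scores.items() if score < threshold)


# Hypothetical per-workflow scores for one Sprint.
sprint_scores = {
    "unit-test generation": 88.0,
    "legacy refactor": 35.0,
    "api docs": 62.0,
}

for name, score in sprint_scores.items():
    print(f"{name}: {aes_band(score)}")

print(workflows_to_inspect(sprint_scores))  # ['legacy refactor']
```

Tracking AES per workflow rather than per agent fits the idea of removing the agent from one specific workflow while keeping it where it performs well.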

2. Human-Agent Handoff Time (The "Context Tax")

In traditional flow metrics (Kanban), we measure Cycle Time. In the AI era, the "coding" cycle time is instant. The delay now lives entirely in the Handoff.

Human-Agent Handoff Time is the time elapsed between an AI Agent signaling "I am stuck, please review" and a human successfully resuming the work.

The Scenario:

09:00 AM: Agent starts a refactor.

09:05 AM: Agent hits ambiguity and pauses, tagging a Senior Engineer.

02:00 PM: Engineer sees the tag.

02:30 PM: Engineer finishes 30 mins of reading logs to understand context and resumes the work.

Handoff Time: about 5.5 hours (09:05 AM to 02:30 PM is 5 hours 25 minutes).
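The arithmetic behind this scenario can be sketched with plain timestamps. This reads the timeline as "stuck at 09:05 AM, work resumed at 02:30 PM", which is an interpretation; the calendar date and the function name are arbitrary assumptions.

```python
from datetime import datetime, timedelta


def handoff_time(stuck_at: datetime, resumed_at: datetime) -> timedelta:
    """Time between the agent's 'I am stuck' signal and the human resuming work."""
    if resumed_at < stuck_at:
        raise ValueError("resumed_at must not be earlier than stuck_at")
    return resumed_at - stuck_at


# Timeline from the scenario; the date itself is arbitrary.
stuck_at = datetime(2026, 1, 15, 9, 5)     # agent tags the engineer
seen_at = datetime(2026, 1, 15, 14, 0)     # engineer sees the tag
resumed_at = datetime(2026, 1, 15, 14, 30)  # engineer has the context back

waiting = seen_at - stuck_at               # time the tag sat unseen
rebuilding_context = resumed_at - seen_at  # time spent reading logs
total = handoff_time(stuck_at, resumed_at)

print(waiting)             # 4:55:00
print(rebuilding_context)  # 0:30:00
print(total)               # 5:25:00
```

Splitting the total into waiting time and context-rebuilding time shows which part of the handoff is worth attacking first: in this scenario almost all of it is the tag sitting unseen.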

This metric exposes the "Context Switching Tax." To improve Flow, Scrum Teams must design "Warm Handoffs", where the agent provides a concise summary of the conflict, allowing the human to decide in minutes, not hours.
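A warm handoff could be as simple as a structured note the agent fills in before it pauses. The sketch below is purely illustrative: the class name, every field name, and the example content are assumptions, not an established format.

```python
from dataclasses import dataclass, field


@dataclass
class WarmHandoff:
    """Hypothetical summary an agent attaches when it tags a human."""
    task: str
    conflict_summary: str  # what the agent is stuck on, in a sentence or two
    question: str          # the single decision the human needs to make
    options: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Format the handoff as a short message a human can act on quickly."""
        lines = [
            f"Task: {self.task}",
            f"Stuck on: {self.conflict_summary}",
            f"Decision needed: {self.question}",
        ]
        lines += [f"  {i}. {opt}" for i, opt in enumerate(self.options, start=1)]
        return "\n".join(lines)


# Made-up example content for illustration only.
note = WarmHandoff(
    task="Refactor the billing module",
    conflict_summary="Two helper functions compute tax with different rounding.",
    question="Which rounding behaviour should the refactor keep?",
    options=["Round per line item", "Round on the invoice total"],
)
print(note.render())
```

The point of the structure is that the human answers one question instead of reconstructing the situation from logs.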

The 2026 EBM Dashboard

To measure value in 2026, we need to swap our "Activity Metrics" for "Outcome Metrics."

Measure Value, Not "Points"

Agile has never been about points. It has always been about the sustainable delivery of value. As AI changes how we work, we must change how we measure that work. If we cling to Velocity, we are lying to ourselves about our productivity.

Join the Conversation

Are "Story Points" dead? We will be debating the future of Agile Metrics at Agile Leadership Day India 2026 on February 28, 2026, in Noida. Join us to build the dashboard of the future.
