If you’ve been around long enough to witness the rise of Scrum and Agile, there’s probably a strange sense of déjà vu in today’s AI conversations.

Back then, Agile was supposed to make organizations more adaptive, more customer-focused, and more humane. What happened instead in many places was something very different. Frameworks were rolled out without leadership buy-in. Teams were told to “be Agile” while still being measured in the same old ways. Executives spoke in strategy decks while teams spoke in Sprint Backlogs. Change management was an afterthought. And worst of all, practices were copied because “everyone else is doing it,” not because they solved an actual problem. "Change is for thee, not for me." In other words, there was a lot of finger-pointing going on and little actual progress.

Sound familiar?

Fast forward to today, and AI is walking straight into the same traps. We see AI initiatives launched without clear sponsorship from leadership (let alone alignment). We see executives talking about “AI strategy” while teams experiment with tools that don’t connect to any real business outcomes. We see different layers of the organization speaking entirely different languages (technical, operational, strategic) without a shared narrative. We see AI tools introduced with minimal thought about long-term implications, ethics, or organizational impact. And we see a lot of hype-driven adoption: chasing trends instead of solving problems.

None of this is new. We’ve just changed the buzzwords. And that’s exactly why many AI implementations are already showing the same dysfunctions *insert buzzword here* struggled with a decade ago.

Why Repeating These Patterns with AI Is Even More Dangerous

Something poetically beautiful, yet undervalued, is this: AI amplifies whatever organizational patterns already exist.

The poetic part lies in the fact that Scrum did the same thing, yet many organizations deliberately chose to ignore it because it was uncomfortable. Guess why you see all these nonsensical “Scrum Is Dead” articles? :)

When Agile was misapplied, the damage was often limited to inefficiency, frustration, or cynicism. Painful, yes, but survivable. AI is different. AI scales decisions, automates judgment, and embeds assumptions into systems that can affect customers, employees, and society at large. The stakes in this new era of change are a lot higher than anything we’ve seen before.

So when AI is implemented without leadership buy-in, it doesn’t just stall; it fragments. When different layers speak different languages, AI doesn’t just confuse; it misaligns incentives and outcomes. When change management is ignored, resistance doesn’t just slow adoption; it pushes AI into the shadows. And when implications aren’t thought through, organizations don’t just make mistakes; they create risks they can’t easily undo. And before we know it, we’re pointing fingers at AI again. Its current promise seems (to me at least) to be higher than the actual ROI.

Perhaps the most familiar dysfunction of all is mindlessly following hype. Agile was once interpreted as a silver bullet. AI is now marketed the same way. Tools promise productivity gains, automation, and competitive advantage with minimal effort. But as many leaders painfully learned with Agile, copying practices without understanding context rarely leads to transformation.

AI does not magically fix broken processes.

It does not compensate for an unclear strategy.

And it absolutely does not replace leadership thinking.

If anything, it demands more of it. So, we need to slow down a bit before throwing wet AI spaghetti against the wall, hoping something will stick.

Why a More Mindful Approach Is No Longer Optional

What both Agile and AI have taught us, sometimes the hard way, is that transformation doesn’t fail because of frameworks or tools. It fails because organizations underestimate the importance of intentional leadership.

A mindful approach to AI doesn’t mean being slow or being cautious for the sake of it. It means being deliberate. It means pausing long enough to ask better questions before making irreversible decisions. It means recognizing that AI is not an IT initiative, not an innovation side project, and not something you “delegate away.”

Mindful AI leadership starts with acknowledging complexity (huh, we've seen this before, haven't we?). Organizations are systems. Decisions ripple. Incentives matter. Culture matters. And technology, especially powerful technology, should be introduced with awareness of how it will interact with all of that.

Problem-thinking over solution-implementation

Guided change over fast rollout

Identify gaps between the current and the desired situation (and where AI might help us out)

Design your own strategy over copying others

This shift, from reactive adoption to thoughtful design, is the difference between repeating old Agile mistakes and actually learning from them. The interesting thing is that none of this is new, yet we (as an industry at large) seem to be falling for the same recurring, destructive patterns again and again.

Introducing the AI Strategy Canvas: A Leadership Tool, Not a Technical One

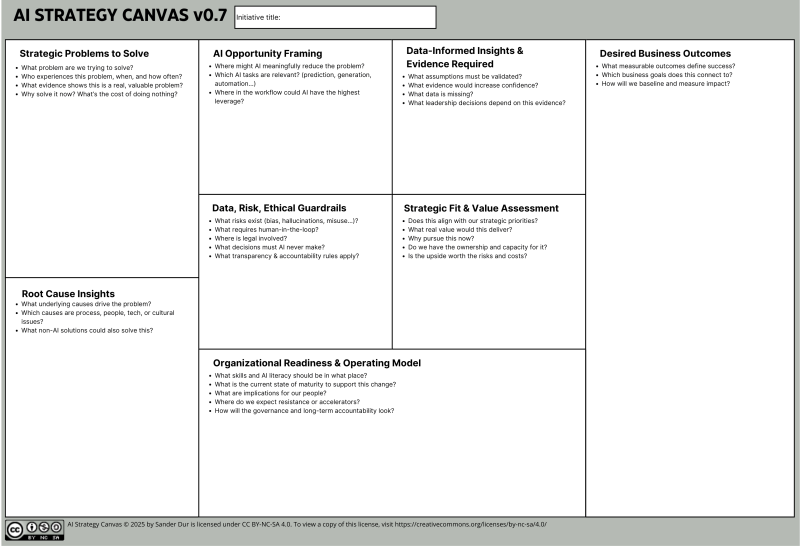

One of the biggest lessons from Agile is that frameworks work best when they support thinking, not replace it. That insight is what led to the creation of the AI Strategy Canvas.

The canvas isn’t a checklist. It’s not a maturity model. And it’s definitely not a tool-selection guide. It’s a thinking framework for leaders, a way to structure conversations that too often stay vague, political, or disconnected. At its core, the canvas forces organizations to slow down at the right moments.

It starts with defining the strategic problem, not the solution. Solutions come later. This alone eliminates a large percentage of hype-driven AI initiatives. If a problem isn’t strategically relevant, AI shouldn’t touch it.

It then pushes leaders to examine root causes. This is where many Agile transformations went wrong: treating symptoms instead of systems. The canvas explicitly asks leaders to look beneath the surface and understand why the problem exists in the first place.

From there, it guides leaders to articulate desired business outcomes. Not activities. Not outputs. Outcomes. “Where do we want to be?” is the main question. This creates a shared language across organizational levels, something Agile often promised but failed to deliver in practice.

Only after this groundwork does the canvas invite AI into the conversation. Not as a magic solution, but as a possible means, one of several, to address a well-understood problem. This reframes AI from “the thing we’re doing” to “the thing that might help.” Like Scrum, AI is a tool. A very powerful one, yes, yet still a tool. So if we don’t properly understand how these tools might help us, we should not use them.

Crucially, the canvas also integrates data realities, risk, and ethics into the strategy itself. Not as a compliance checkbox at the end, but as a fundamental part of deciding whether something should exist at all. This is where AI differs sharply from earlier transformations: the cost of getting this wrong is simply too high.

It then asks leaders to assess organizational readiness and operating models. Who owns this? Who is accountable? How does this live beyond a pilot? These are exactly the questions Agile transformations often postponed, and paid for later. Asking them up front fosters an organization-wide approach rather than a narrow team focus.

Finally, the canvas ends with a strategic fit and value assessment. This is the moment of truth. Does this initiative truly deserve attention right now? Does it align with the long-term direction? Is the value worth the disruption? If not, the most mindful decision may be to not proceed at all.

And that, paradoxically, is often the most strategic move.

Ideally, I see this strategy combined with an experiment setup and a communication plan that eases the change into the organization, rather than forcing it down people’s throats.

Conclusion: Learning from the Past to Lead the Future

AI doesn’t have to repeat the mistakes of Agile. But avoiding them requires humility, reflection, and leadership that’s willing to say, “We need to think this through.”

We already know what happens when transformations are driven by hype, disconnected language, and insufficient leadership ownership. We’ve lived through it. Many organizations are still recovering from it. AI gives us a second chance, not to be perfect, but to be more intentional. The AI Strategy Canvas exists to support that intention. It’s a tool to help leaders create clarity where there is noise, alignment where there is fragmentation, and strategy where there is currently improvisation.

If you’re serious about avoiding the same dysfunctions, about leading AI in a way that actually supports your goals rather than undermining them, this is a good place to start.

You can find and download the AI Strategy Canvas right here.

Use it to ask better questions.

Use it to slow down before you speed up.

And most importantly, use it to lead AI with the kind of mindfulness we wish we had applied to the hypes that came before.